BodyCoM

Rethinking Dynamic Whole-body Multicontact Interaction: Towards Next Generation of Collaborative Robots

Humans have a remarkable ability to manipulate objects by exploiting physical contact. Can a robot manipulate objects better if it learns to exploit physical interaction through multiple contacts, such as bracing its elbows against the environment? Humans do exactly this: for accurate manipulation, they tend to brace their forearms against the environment. In robotics, this way of using the environment to improve manipulation performance has not been explored before, primarily because robots were rigid and neither allowed nor required whole-body contact. However, the advent of new lightweight structures and torque sensors has led to the rapid proliferation of collaborative robots in many different fields. Additional research is needed to bring their capabilities in line with what is typically desired and expected from robots, especially in high-accuracy tasks. The goal of BodyCoM is to explore how multi-contact interaction can improve the performance of a new generation of robots, in three phases of research and development. First, we will analyse how humans use the environment to improve their accuracy and endurance. Second, we will extend the control components to enable whole-body contact. Third, we will develop a novel motor-primitive control architecture that enables goal-directed multi-contact interaction and accelerates the learning of compliant behaviours. By combining the robotics methods developed at the host department with the PI's expertise in physical human-robot interaction, BodyCoM is uniquely positioned to break new ground in the cognitive exploitation of environmental contacts and constraints. The expected outcome of the project is a demonstration of how robots can use whole-body multi-contact interaction for efficient and precise manipulation.

Publications

Journal Articles

A Geometric Approach to Task-Specific Cartesian Stiffness Shaping Journal Article

In: Journal of Intelligent and Robotic Systems: Theory and Applications, vol. 110, no. 1, 2024, ISSN: 15730409.

Kinematic model calibration of a collaborative redundant robot using a closed kinematic chain Journal Article

In: Scientific Reports, vol. 13, no. 1, pp. 1–12, 2023, ISSN: 20452322.

Kinematic calibration for collaborative robots on a mobile platform using motion capture system Journal Article

In: Robotics and Computer-Integrated Manufacturing, vol. 79, pp. 102446, 2022, ISSN: 0736-5845.

Proceedings Articles

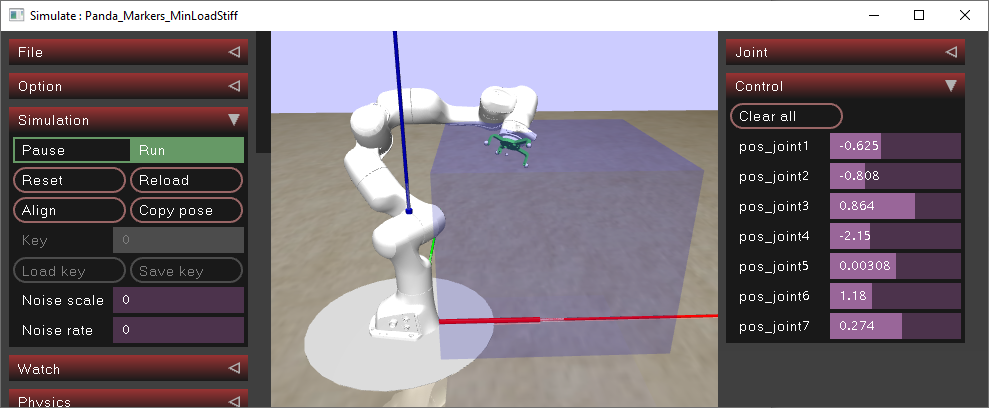

RobotBlockSet (RBS)—A Comprehensive Robotics Framework Proceedings Article

In: Pisla, Doina; Carbone, Giuseppe; Condurache, Daniel; Vaida, Calin (Ed.): Advances in Service and Industrial Robotics, pp. 439–450, Springer Nature Switzerland, Cham, 2024, ISBN: 978-3-031-59257-7.

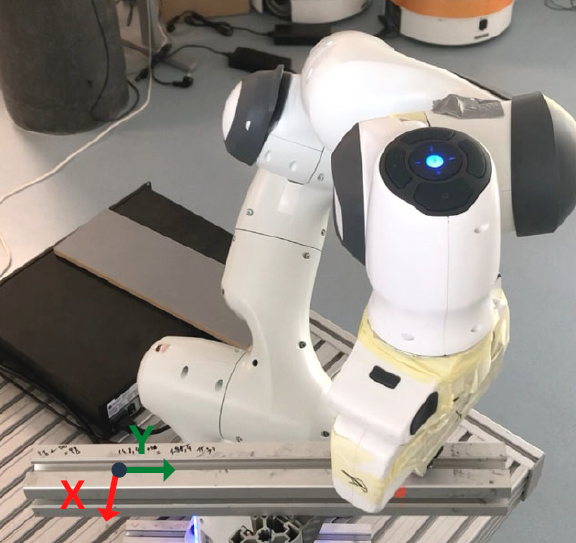

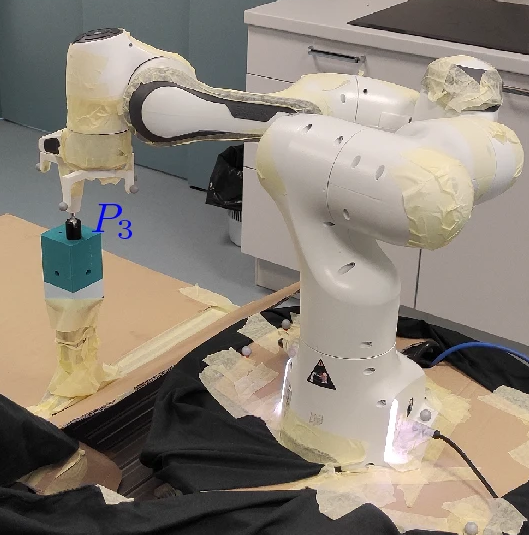

A Novel Approach Exploiting Contact Points on Robot Structures for Enhanced End-Effector Accuracy Proceedings Article

In: Pisla, Doina; Carbone, Giuseppe; Condurache, Daniel; Vaida, Calin (Ed.): Advances in Service and Industrial Robotics, pp. 329–336, Springer Nature Switzerland, Cham, 2024, ISBN: 978-3-031-59257-7.

Optimizing Robot Positioning Accuracy with Kinematic Calibration and Deflection Estimation Proceedings Article

In: Petrič, Tadej; Ude, Aleš; Žlajpah, Leon (Ed.): Advances in Service and Industrial Robotics, pp. 255–263, Springer Nature Switzerland, Cham, 2023, ISBN: 978-3-031-32606-6.

Partners

JSI Team

| Member | COBISS ID | Role | Period |
| --- | --- | --- | --- |
| Petrič Tadej | 30885 | PI | 2022–2025 |
| Žlajpah Leon | 03332 | Researcher | 2022–2025 |
| Brecelj Tilen | 37467 | Researcher | 2022–2025 |
| Mišković Luka | 54681 | Junior Researcher | 2022–2025 |
| Kropivšek Leskovar Rebeka | 53766 | Technician | 2022–2025 |
| Reberšek Simon | 39258 | Technician | 2022–2025 |

Funding source

ARRS grant no.: N2-0269